- Pin

- 🎊 ETH Deposit & Trading Carnival Kicks Off!

Join the Trading Volume & Net Deposit Leaderboards to win from a 20 ETH prize pool

🚀 Climb the ranks and claim your ETH reward: https://www.gate.com/campaigns/site/200

💥 Tiered Prize Pool – Higher total volume unlocks bigger rewards

Learn more: https://www.gate.com/announcements/article/46166

- 📢 ETH Heading for $4800? Have Your Say! Show Off on Gate Square & Win 0.1 ETH!

The next bull market prophet could be you! Want your insights to hit the Square trending list and earn ETH rewards? Now’s your chance!

💰 0.1 ETH to be shared between 5 top Square posts + 5 top X (Twitter) posts by views!

🎮 How to Join – Zero Barriers, ETH Up for Grabs!

1.Join the Hot Topic Debate!

Post in Gate Square or under ETH chart with #ETH Hits 4800# and #ETH# . Share your thoughts on:

Can ETH break $4800?

Why are you bullish on ETH?

What's your ETH holding strategy?

Will ETH lead the next bull run?

Or any o

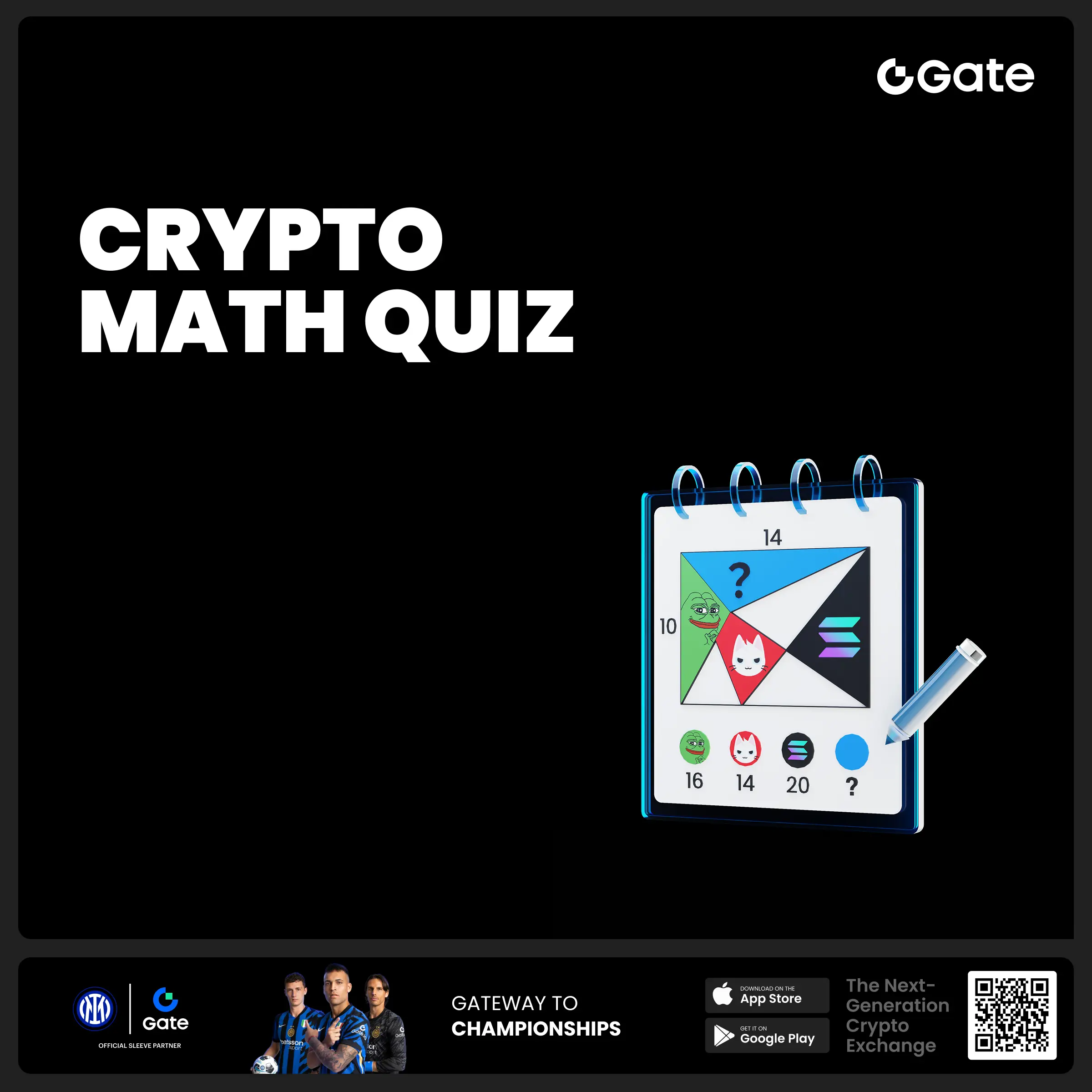

- 🧠 #GateGiveaway# - Crypto Math Challenge!

💰 $10 Futures Voucher * 4 winners

To join:

1️⃣ Follow Gate_Square

2️⃣ Like this post

3️⃣ Drop your answer in the comments

📅 Ends at 4:00 AM July 22 (UTC)

- 🎉 #Gate Alpha 3rd Points Carnival & ES Launchpool# Joint Promotion Task is Now Live!

Total Prize Pool: 1,250 $ES

This campaign aims to promote the Eclipse ($ES) Launchpool and Alpha Phase 11: $ES Special Event.

📄 For details, please refer to:

Launchpool Announcement: https://www.gate.com/zh/announcements/article/46134

Alpha Phase 11 Announcement: https://www.gate.com/zh/announcements/article/46137

🧩 [Task Details]

Create content around the Launchpool and Alpha Phase 11 campaign and include a screenshot of your participation.

📸 [How to Participate]

1️⃣ Post with the hashtag #Gate Alpha 3rd - 🚨 Gate Alpha Ambassador Recruitment is Now Open!

📣 We’re looking for passionate Web3 creators and community promoters

🚀 Join us as a Gate Alpha Ambassador to help build our brand and promote high-potential early-stage on-chain assets

🎁 Earn up to 100U per task

💰 Top contributors can earn up to 1000U per month

🛠 Flexible collaboration with full support

Apply now 👉 https://www.gate.com/questionnaire/6888

Ethereum's Surge Blueprint: Breaking the Scalability Trilemma and Scaling L2 Performance to 100,000 TPS

Ethereum's Possible Future: The Surge

Ethereum's roadmap originally included two scalability strategies: sharding and Layer 2 protocols. These two paths eventually merged into a Rollup-centric roadmap, which remains Ethereum's current scaling strategy.

The Rollup-centric roadmap proposes a simple division of labor: Ethereum L1 focuses on being a robust and decentralized base layer, while L2s take on the task of helping the ecosystem scale. This pattern recurs throughout society: the court system (L1) exists to protect contracts and property rights, while entrepreneurs (L2) build on that foundation to drive progress.

This year, the Rollup-centric roadmap has made significant progress: with the launch of EIP-4844 blobs, Ethereum L1's data bandwidth has increased dramatically, and several EVM Rollups have reached Stage 1. Each L2 exists as a "shard" with its own rules and logic, and diversity and pluralism in how shards are implemented is now a reality. However, this path also faces some unique challenges. Our task now is to complete the Rollup-centric roadmap and address these problems, while preserving the robustness and decentralization of Ethereum L1.

The Surge: Key Objectives

In the future, Ethereum can achieve over 100,000 TPS through L2;

Maintain the decentralization and robustness of L1;

At least some L2s fully inherit Ethereum's core properties: trustless, open, and censorship-resistant;

Ethereum should feel like a unified ecosystem, rather than 34 different blockchains.

![Vitalik's New Article: The Possible Future of Ethereum, The Surge](https://img-cdn.gateio.im/webp-social/moments-6e846316491095cf7d610acb3b583d06.webp)


The Scalability Trilemma

The scalability trilemma holds that there is a tension between three properties of a blockchain: decentralization (specifically, a low cost of running a node), scalability (the ability to process a high volume of transactions), and security (an attacker would need to compromise a large portion of the nodes in the network to make even a single transaction fail).

It is worth noting that the trilemma is not a theorem, and the post that introduced it did not come with a mathematical proof. It did offer a heuristic argument: if a decentralization-friendly node can verify N transactions per second, and you have a chain that processes k*N transactions per second, then either (i) each transaction is seen by only 1/k of the nodes, so an attacker only needs to compromise a few nodes to push through a malicious transaction, or (ii) your nodes must become powerful, and your chain is no longer decentralized. The purpose of that post was never to prove that breaking the trilemma is impossible; rather, it was to show that breaking it is difficult and requires, to some extent, stepping outside the framework of thought implied by the argument.
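Option (i) of the heuristic is plain arithmetic. The sketch below, using hypothetical throughput numbers, computes the fraction of nodes able to check any given transaction when the chain runs k times faster than a single node can verify. The function name is illustrative only.

```python
def fraction_of_nodes_checking(node_tps: float, chain_tps: float) -> float:
    """Heuristic from the trilemma argument: if a node can verify node_tps
    transactions per second and the chain processes chain_tps = k * node_tps,
    each transaction can be checked by only about 1/k of the nodes."""
    k = chain_tps / node_tps
    # If the chain is no faster than a node, every node can check everything.
    return min(1.0, 1.0 / k)

# Hypothetical numbers: nodes verify 1,000 TPS while the chain runs 10,000 TPS (k = 10).
print(fraction_of_nodes_checking(1_000, 10_000))  # 0.1
```

With k = 10, an attacker only has to fool the tenth of the network that actually looks at a given transaction.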

For many years, some high-performance chains have claimed to solve the trilemma without fundamentally changing the architecture, often by using software engineering techniques to optimize nodes. This is always misleading, as running a node on these chains is much more difficult than running a node on Ethereum.

However, the combination of data availability sampling and SNARKs does solve the trilemma: it allows a client to verify that a certain amount of data is available and that a certain number of computation steps were executed correctly, while downloading only a small amount of data and performing minimal computation. SNARKs are trustless. Data availability sampling has a subtle few-of-N trust model, but it preserves the fundamental property of non-scaling chains: even a 51% attack cannot force the network to accept bad blocks.
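The few-of-N trust model rests on a sampling argument. A minimal sketch, assuming the PeerDAS parameters quoted later in this article (any 64 of 128 samples recover the data): an attacker who wants the data to be unrecoverable can publish at most 63 samples, and a client requesting k distinct random samples is fooled only if every one of them lands among those 63. The function name is illustrative only.

```python
from math import comb

def prob_unavailable_block_passes(total_samples: int, available: int, k: int) -> float:
    """Probability that a client drawing k distinct random samples (without
    replacement) finds all of them available, when only `available` of
    `total_samples` samples were published."""
    if k > available:
        return 0.0  # the client is guaranteed to hit a missing sample
    return comb(available, k) / comb(total_samples, k)

# Assumption: any 64 of 128 samples recover the blob, so the attacker publishes 63.
for k in (8, 16, 32):
    print(k, prob_unavailable_block_passes(128, 63, k))
```

Each additional sample more than halves the chance of being fooled, which is why a handful of samples per client is enough.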

Another way to solve the trilemma is the Plasma architecture, which uses clever techniques to push the responsibility for monitoring data availability onto users in an incentive-compatible way. Back in 2017-2019, when fraud proofs were our only tool for scaling computation, Plasma was very limited in what it could do securely. With the mainstreaming of SNARKs, however, Plasma architectures have become viable for a much wider range of use cases than ever before.

Further Progress on Data Availability Sampling

What problem are we solving?

With the Dencun upgrade, which went live on March 13, 2024, Ethereum has 3 blobs of approximately 125 kB each per 12-second slot, or about 375 kB of data availability bandwidth per slot. Assuming transaction data is published directly on-chain, an ERC20 transfer takes about 180 bytes, so the maximum TPS for Rollups on Ethereum is: 375000 / 12 / 180 = 173.6 TPS.

If we add Ethereum's calldata (theoretical maximum: 30 million gas per slot / 16 gas per byte = 1,875,000 bytes per slot), this becomes 607 TPS. With PeerDAS, the plan is to raise the blob count target to 8-16, which would provide 463-926 TPS from blobs alone.

This is a significant upgrade to Ethereum L1, but it's not enough. We want more scalability. Our medium-term goal is 16 MB per slot, which, combined with improvements in Rollup data compression, will bring about ~58000 TPS.
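The throughput figures above all come from one division: bytes of data availability per slot, divided by the 12-second slot time, divided by bytes per transaction. The sketch below reproduces the blob-based numbers using the approximate 125 kB blob size and the 180-byte ERC20 transfer from the text. The last line back-solves how small a compressed transfer would have to be to reach ~58,000 TPS at 16 MB per slot; that ~23-byte figure is derived here, not stated in the text.

```python
SLOT_SECONDS = 12
ERC20_TRANSFER_BYTES = 180  # uncompressed transfer size quoted in the text
BLOB_BYTES = 125_000        # approximate blob size used in the text (~125 kB)

def rollup_tps(bytes_per_slot: float, bytes_per_tx: float = ERC20_TRANSFER_BYTES) -> float:
    """Upper bound on rollup TPS if all data-availability bandwidth carries transfers."""
    return bytes_per_slot / SLOT_SECONDS / bytes_per_tx

print(round(rollup_tps(3 * BLOB_BYTES), 1))                                   # 173.6 (Dencun, 3 blobs)
print(round(rollup_tps(8 * BLOB_BYTES)), round(rollup_tps(16 * BLOB_BYTES)))  # 463 926 (PeerDAS target range)
print(round(rollup_tps(16_000_000)))                                          # 7407 (16 MB per slot)

# Bytes per transfer implied by ~58,000 TPS at 16 MB per slot (derived, not from the text).
print(round(16_000_000 / SLOT_SECONDS / 58_000, 1))                           # 23.0
```

Raising the numerator (bytes per slot) is the job of DAS; shrinking the denominator (bytes per transaction) is the job of data compression.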

What is it? How does it work?

PeerDAS is a relatively simple implementation of "1D sampling". In Ethereum, each blob is a polynomial of degree below 4096 over a 253-bit prime field. We broadcast "shares" of the polynomial, where each share consists of 16 evaluations at 16 adjacent coordinates, taken from a total of 8192 coordinates. Any 4096 of these 8192 evaluations can recover the blob; with the currently proposed parameters, that means any 64 of the 128 possible samples.
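The "any 4096 of 8192" property is Reed-Solomon erasure coding: evaluate the polynomial at twice as many points as it has coefficients, and any half of those evaluations pins it down again. The toy below shows the idea with 8 values extended to 16, a small prime (65537), and plain integer x-coordinates. These are illustrative assumptions; the real scheme works over the BLS12-381 scalar field, uses a structured evaluation domain, and attaches KZG proofs to each sample.

```python
import random

P = 65537  # toy prime field, standing in for the real 253-bit field

def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points) through `points`."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # Division via Fermat's little theorem: den^(P-2) is den^-1 mod P.
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total

def extend(data):
    """Treat data as evaluations at x = 0..k-1 and extend to x = 0..2k-1."""
    pts = list(enumerate(data))
    return [lagrange_eval(pts, x) for x in range(2 * len(data))]

def recover(samples, k):
    """Rebuild the original k values from any k (x, y) points of the extension."""
    return [lagrange_eval(samples[:k], x) for x in range(k)]

data = [random.randrange(P) for _ in range(8)]
extended = extend(data)                              # 16 evaluations
subset = random.sample(list(enumerate(extended)), 8)  # any half of them
assert recover(subset, 8) == data
```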

PeerDAS works by having each client listen on a small number of subnets, where the i-th subnet broadcasts the i-th sample of any blob; the client additionally requests the samples it needs from other subnets by asking its peers in the global p2p network (who listen on different subnets). A more conservative version, SubnetDAS, uses only the subnet mechanism, without the additional step of asking peers. The current proposal is for nodes participating in proof of stake to use SubnetDAS, and for other nodes (i.e., clients) to use PeerDAS.
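A rough sketch of the difference between SubnetDAS and PeerDAS. The subnet count, the custody count of 4, and the random subnet choice are hypothetical simplifications, and `Node` and `fetch_sample` are illustrative names rather than any client's API.

```python
import random

# Hypothetical parameters for illustration only; the actual specification
# defines its own subnet count and custody rules.
NUM_SUBNETS = 128     # assume one subnet per sample index
CUSTODY_SUBNETS = 4   # assume each node listens on 4 subnets

class Node:
    def __init__(self, node_id, rng):
        self.node_id = node_id
        # Simplification: subnets are picked at random, not derived from the node ID.
        self.subnets = set(rng.sample(range(NUM_SUBNETS), CUSTODY_SUBNETS))

    def has_sample(self, sample_index):
        # The i-th subnet broadcasts the i-th sample of every blob.
        return sample_index % NUM_SUBNETS in self.subnets

def fetch_sample(me, peers, sample_index, use_peers=True):
    """Return where a sample came from, or None if it could not be obtained.

    use_peers=False models SubnetDAS (own subnets only);
    use_peers=True models PeerDAS (also ask peers listening on other subnets).
    """
    if me.has_sample(sample_index):
        return ("subnet", me.node_id)
    if use_peers:
        for peer in peers:
            if peer.has_sample(sample_index):
                return ("peer", peer.node_id)
    return None

rng = random.Random(42)
nodes = [Node(i, rng) for i in range(50)]
me, peers = nodes[0], nodes[1:]
wanted = rng.sample(range(NUM_SUBNETS), 8)
print([fetch_sample(me, peers, s) for s in wanted])                   # PeerDAS
print([fetch_sample(me, peers, s, use_peers=False) for s in wanted])  # SubnetDAS
```

The SubnetDAS run can only answer for the few samples on its own subnets, which is why it is the more conservative but less flexible option.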

In theory, we can scale "1D sampling" quite far: if we increase the maximum blob count to 256 (with a target of 128), we reach the 16 MB target, and each node performing data availability sampling needs 16 samples * 128 blobs * 512 bytes per sample per blob = 1 MB of data bandwidth per slot. This is just barely within our tolerance: it is feasible, but it means bandwidth-constrained clients cannot sample. We can optimize this somewhat by decreasing the blob count and increasing the blob size, but this makes reconstruction more expensive.
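The 16 MB and 1 MB figures can be checked from the EIP-4844 blob layout (4096 field elements of 32 bytes each) and the 16 evaluations per sample described above. A quick sketch:

```python
FIELD_ELEMENT_BYTES = 32    # EIP-4844: bytes per field element
BLOB_FIELD_ELEMENTS = 4096  # EIP-4844: field elements per blob
EVALS_PER_SAMPLE = 16       # adjacent evaluations per sample (from the text)

sample_bytes = EVALS_PER_SAMPLE * FIELD_ELEMENT_BYTES   # 512 bytes
blob_bytes = BLOB_FIELD_ELEMENTS * FIELD_ELEMENT_BYTES  # 131,072 bytes (128 KiB)

target_blobs = 128              # target from the text (maximum 256)
samples_per_blob_per_node = 16  # samples each DAS node downloads per blob

data_per_slot = target_blobs * blob_bytes
node_bandwidth = samples_per_blob_per_node * target_blobs * sample_bytes
print(data_per_slot / 2**20, node_bandwidth / 2**20)  # 16.0 1.0 (MiB per slot)
```

The per-node cost grows linearly with the blob count, which is the scaling limit that motivates 2D sampling.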

Therefore, we ultimately want to go a step further and perform 2D sampling, which samples randomly not only within blobs but also between blobs. The linear property of KZG commitments is used to extend the set of blobs in a block with a list of new "virtual blobs" that redundantly encode the same information.
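Why the virtual blobs are valid: extension is a linear map, so extending rows and then columns produces the same grid as extending columns and then rows. The toy below reuses the small-field Reed-Solomon idea with hypothetical sizes (4 blobs of 4 elements, prime 65537) to check this; a 2D sampler would then request random (row, column) cells of the resulting 2k x 2m grid. It illustrates the linear algebra only, not the real KZG-based construction.

```python
import random

P = 65537  # toy prime field, standing in for the BLS12-381 scalar field

def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial of degree < len(points) through `points`."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total

def extend(values):
    """Reed-Solomon style 2x extension: values are evaluations at x = 0..n-1."""
    pts = list(enumerate(values))
    return [lagrange_eval(pts, x) for x in range(2 * len(values))]

def extend_columns(rows):
    """Extend every column of a matrix, producing twice as many rows."""
    ext_cols = [extend(list(col)) for col in zip(*rows)]
    return [list(row) for row in zip(*ext_cols)]

k, m = 4, 4  # hypothetical: 4 blobs of 4 field elements each
rng = random.Random(0)
blobs = [[rng.randrange(P) for _ in range(m)] for _ in range(k)]

# Order A: extend each blob horizontally, then extend every column vertically.
grid = extend_columns([extend(b) for b in blobs])

# Order B: first build the "virtual blobs" (extra rows), then extend every row.
grid_b = [extend(row) for row in extend_columns(blobs)]

assert grid == grid_b  # same 2k x 2m grid either way, because extension is linear
```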

Crucially, computing the extension of the commitments does not require having the blobs, which makes this scheme fundamentally friendly to distributed block construction. The node that actually constructs the block only needs the blob KZG commitments, and can itself rely on data availability sampling (DAS) to verify that the blobs are available. One-dimensional DAS (1D DAS) is also inherently friendly to distributed block construction.
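The same linearity is what lets a builder extend the commitments without holding the blobs. The sketch below substitutes a toy linear commitment for KZG (it is not KZG and provides no security); it only shows that combining the original commitments with Lagrange weights gives exactly the commitment to each virtual blob. `G`, `commit`, and the sizes are hypothetical.

```python
import random

P = 65537               # toy prime field
M = 4                   # hypothetical blob length (field elements)
rng = random.Random(1)
G = [rng.randrange(1, P) for _ in range(M)]  # hypothetical public parameters

def commit(blob):
    """Toy linear commitment. NOT KZG and not binding; it only shares the
    property commit(a*x + b*y) == a*commit(x) + b*commit(y)."""
    return sum(g * v for g, v in zip(G, blob)) % P

def lagrange_coeffs(xs, x):
    """Weights c_i such that f(x) == sum(c_i * f(xs[i])) whenever deg(f) < len(xs)."""
    coeffs = []
    for i, xi in enumerate(xs):
        num, den = 1, 1
        for j, xj in enumerate(xs):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        coeffs.append(num * pow(den, P - 2, P) % P)
    return coeffs

def virtual_commitments_match(blobs):
    k = len(blobs)
    xs = list(range(k))
    commitments = [commit(b) for b in blobs]  # all a commitment-only builder holds
    for x in range(k, 2 * k):                 # positions of the virtual blobs
        c = lagrange_coeffs(xs, x)
        # With the data: build the virtual blob column by column, then commit to it.
        virtual = [sum(ci * b[col] for ci, b in zip(c, blobs)) % P for col in range(M)]
        # Without the data: combine the original commitments with the same weights.
        from_commitments = sum(ci * cm for ci, cm in zip(c, commitments)) % P
        if commit(virtual) != from_commitments:
            return False
    return True

blobs = [[rng.randrange(P) for _ in range(M)] for _ in range(4)]
print(virtual_commitments_match(blobs))  # True
```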

What else needs to be done? What are the trade-offs?

The next step is to finish implementing and rolling out PeerDAS. From there, it is a gradual process of increasing the blob count on PeerDAS while carefully monitoring the network and improving the software to ensure safety. At the same time, we want more academic work on formalizing PeerDAS and other versions of DAS, and on their interactions with issues such as fork choice rule safety.

Further into the future, we need much more work to determine the ideal version of 2D DAS and to prove its security properties. We also hope to eventually migrate from KZG to a quantum-safe alternative that does not require a trusted setup. Currently, it is unclear which candidates are friendly to distributed block construction. Even the expensive "brute force" technique of using recursive STARKs to generate validity proofs for reconstructed rows and columns is not sufficient: although a STARK is technically $O(\log n \cdot \log\log n)$ hashes in size (using STIR), in practice a STARK is almost as large as an entire blob.

The realistic long-term paths, as I see them, are:

Note that this choice exists even if we decide to scale execution directly on L1. If L1 is to handle a large volume of TPS, L1 blocks will become very large, and clients will want an efficient way to verify that they are correct, so we would have to use the same technologies on L1 that power Rollups (such as ZK-EVM and DAS).

How does it interact with other parts of the roadmap?

If data compression is implemented, the need for 2D DAS decreases, or is at least delayed, and it decreases further if Plasma is widely adopted. DAS also poses challenges for distributed block construction protocols and mechanisms: although DAS is theoretically friendly to distributed reconstruction, in practice it needs to be combined with inclusion list proposals and their surrounding fork choice mechanics.

![Vitalik's New Article: The Possible Future of Ethereum, The Surge](https://img-cdn.gateio.im/webp-social/moments-5d1a322bd6b6dfef0dbb78017226633d.webp)

Data Compression

What problem are we solving?

Each transaction in a Rollup takes up a significant amount of on-chain data space: an ERC20 transfer requires about 180 bytes. Even with ideal data availability sampling, this limits the scalability of Layer 2 protocols. With 16 MB per slot, we get:

16000000 / 12 / 180 = 7407 TPS

What if, in addition to addressing the numerator, we could also address the denominator, and make each transaction in a Rollup occupy fewer bytes on-chain?

What is it and how does it work?

In my opinion, the best explanation is this picture from two years ago:

In zero-byte compression, each long sequence of zero bytes is replaced with two bytes that indicate how many zero bytes there are. Furthermore, we leverage specific properties of transactions:
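A minimal sketch of zero-byte compression. The exact encoding is an assumption for illustration: a run of zeros becomes a 0x00 marker followed by a one-byte count, and runs longer than 255 are split. An isolated zero byte grows to two bytes, which is why the technique targets long runs, such as the leading zeros of a 32-byte ABI-encoded token amount.

```python
def compress_zeros(data: bytes) -> bytes:
    """Replace each run of zero bytes with two bytes: 0x00, then the run length (1-255)."""
    out = bytearray()
    i = 0
    while i < len(data):
        if data[i] == 0:
            run = 0
            while i < len(data) and data[i] == 0 and run < 255:
                run += 1
                i += 1
            out += bytes([0, run])
        else:
            out.append(data[i])
            i += 1
    return bytes(out)

def decompress_zeros(data: bytes) -> bytes:
    """Inverse of compress_zeros: expand each (0x00, count) pair back into zeros."""
    out = bytearray()
    i = 0
    while i < len(data):
        if data[i] == 0:
            out += bytes(data[i + 1])  # bytes(n) is n zero bytes
            i += 2
        else:
            out.append(data[i])
            i += 1
    return bytes(out)

# A token amount of 1000 padded to a 32-byte word: 30 zero bytes, then 0x03 0xE8.
word = (1000).to_bytes(32, "big")
compressed = compress_zeros(word)
print(len(word), len(compressed))  # 32 4
assert decompress_zeros(compressed) == word
```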

Signature aggregation: we switch from ECDSA signatures to BLS signatures. BLS signatures have the property that many signatures can be combined into a single signature that proves the validity of all the originals. BLS signatures are not used on L1 because verification is computationally expensive, even with aggregation. In an L2 environment where data is scarce, however, BLS signatures make sense. The aggregation feature of ERC-4337 provides one way to implement this.
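The data saving from aggregation is easy to estimate. The sketch assumes 65-byte ECDSA signatures (r, s, v) and 96-byte BLS12-381 signatures (the G2 format used on Ethereum's consensus layer), and it ignores the public keys and messages a verifier also needs, which a rollup may be able to reference from state instead of publishing.

```python
ECDSA_SIG_BYTES = 65  # r (32) + s (32) + v (1), as in Ethereum transactions
BLS_SIG_BYTES = 96    # BLS12-381 signature in G2 (consensus-layer format)

def signature_bytes_per_tx(n_txs: int, aggregate: bool) -> float:
    """On-chain signature bytes per transaction for a batch of n_txs."""
    if aggregate:
        # One aggregate BLS signature is shared by the whole batch.
        return BLS_SIG_BYTES / n_txs
    # Without aggregation, every transaction carries its own ECDSA signature.
    return ECDSA_SIG_BYTES

for n in (1, 10, 1000):
    print(n, signature_bytes_per_tx(n, aggregate=False), signature_bytes_per_tx(n, aggregate=True))
```

For a single transaction BLS is larger, but once a batch holds more than one or two transactions the per-transaction signature cost falls well below ECDSA's fixed 65 bytes.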

Use po